Do You Really Want A Driverless Car?

Driverless cars may create unexpected problems.

Some people truly love the automobile, enjoying their time behind the wheel even when there's another stopped car three feet in front of their bumper.

For the rest of us, the idea of a self-driving car that whisks us from place to place while we do something more productive and less stressful is a fair approximation of Heaven. Checking email, playing a game, even just taking a nap - think of all the time wasted actively driving your commute that could be put to some better, or at least more pleasant, use!

Billionaires from Elon Musk on down are working hard to make that possible. And let's face it - on a long trip, leaving the driving to Robby the Robot can't help but be a good idea. Nobody likes staring at mile after mile of boring freeway.

When it comes to your commute, though, we may be overlooking a minor detail - one that has already caused a good many small accidents which, while individually insignificant, collectively add up to a big problem:

While the public may most fear a marauding vehicle without a driver behind the wheel, the reality is that autonomous vehicles are overly cautious. They creep out from stop signs after coming to a complete stop and mostly obey the letter of the law, unlike humans.

Time In the Car vs Time In Bed?

As human beings, we make conscious choices about our every action, particularly in a car. We all know the speed limit and stop sign laws. We also all know, with pretty good accuracy, how they are actually enforced in our area.

Blowing through a stop sign is illegal, dangerous, and a bad idea. What about a "California roll," though, where the driver slows down to 5mph and then cruises through? That's certainly slow enough to instantly stop if there's a reason to, but preserves valuable momentum, reduces the pollution from a hard acceleration, saves fuel, and generally Saves the Planet.

You could get ticketed for "failure to stop" in such a case, but you'd have to be extremely unlucky. Most of us judge that the risk is worth the time and gas we save.

Self-driving cars, though, don't get to make that call: they must be explicitly programmed, and that programming can be subpoenaed in a lawsuit. It's essential that the code match the letter of the law religiously. So autonomous vehicles will always come to a full and complete stop at every stop sign, regardless of whether it's midnight and there are no headlights visible for miles in any direction.

Then there are speed limits. Does anyone obey the speed limit, ever? At the very least, the speed of traffic is "rounded up" to the nearest whole number; more commonly it's "7 over" that's thought to be safe from citation.

Now, there are those particular speed traps where extra care is in order, but most of us know where they are in our immediate neighborhoods and treat them with the respect they deserve. A computer can't know this - it literally can't, because any car company which tried to program in such information would be instantly sued into bankruptcy. For the same reason, the autonomous vehicle must precisely observe the speed limit, deafly ignoring the dozens of angry fellow travelers piling up behind.

How about stoplights? Running a red light is asking for death. A self-driving car will courteously brake for an impending yellow light, though, whereas 99% of human beings will floor it to get through the intersection before it changes and they're stuck waiting.

How much of a difference this will make to your personal commute depends on a lot of things, but the deeply conservative approach of an autonomous vehicle could easily increase your drive time by 25% all told.

Of course, when the technology matures, you personally won't have to do that driving; you can be doing something else in the car, and maybe it will be worth it. For the same reason, many people take the train to work even if it takes a bit longer, because they can just sit there and be carried along.

Those of us with more aggressive personalities won't be amused by a car that frustratingly slows down for a yellow when we "know" it could make the light if only it would try! It may be more aggravating to cuss out the car we're in than to just do the driving ourselves.

A reliable self-driving car would be a fantastic technical achievement, and it'll certainly find a market for long-haul drivers of all kinds. For the daily commute, though, it may be longer than Elon Musk thinks before everyone adopts his pride and joy.

-

Tools:

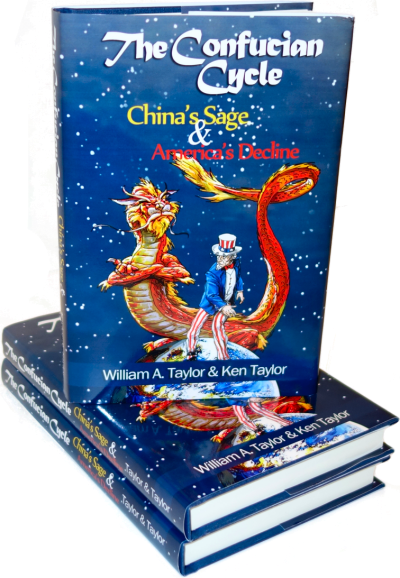

What does Chinese history have to teach America that Mr. Trump's cabinet doesn't know?

Petrarch is usually right on the mark, but this time we need to look over the horizon to a truly driverless car environment: the cars will communicate with each other and with a central 'neural network' that will guide them for optimum efficiency. There will be no traffic lights or stop signs. A car approaching an intersection will know whether it needs to stop. The same goes for speed limits, which can be much higher once human error is removed from the equation. This utopian vision will take a while, but some technologies will not be deterred.

For a vision of this world, the movies "Minority Report" and "I, Robot" have excellent portrayals. It works great as long as everything is smooth and right. As soon as anything goes wrong, either in the control system or via an oppressive government, ordinary people lose their independence and freedom of motion very quickly. Do we want to be entirely dependent that way?

I agree with Patience and wish to take her comments a step further. A "self-driving" car is actually a car that somebody else is driving for you. That opens a whole can of worms, because that somebody has the power to lock your doors and drive you to a place you do not want to go. All the interlocking power of the digital world can easily give Big Brother the knowledge that you, in your car, are the guy who posted a politically incorrect comment on Facebook 5 years ago. That's not good. I don't want Big Brother even in the back seat of my car, let alone the driver's seat.

Patience & Kit: I agree with your concern about Big Brother. However, by the time driverless cars arrive as described, Big Brother will be so entrenched in our lives that where the car is taking you will be the least of your problems.

It has been well documented that most people are all too willing to give up their privacy to an all-knowing internet, and to the collection of their data, in exchange for the convenience of technology. What happens between now and the advent of driverless cars will most likely reinforce that conclusion.

How about a comment from someone who actually OWNS a semi-autonomous car? And no, it's not a Tesla; their Autopilot and intrusive tracking systems scare me.

My car has ~48,000 miles on it now. About 95% of that was driven with the car doing the driving. When I say doing the driving, I mean acceleration, braking, and steering were all under the car's control. NHTSA rules prevent my car from following a route, but the technology's there.

In practical terms, driving my car is much like riding a horse. It knows enough to keep itself out of trouble, but good luck getting the horse to understand that you want to go to Costco. You point it in the direction you want to go and it'll keep going that way until you tell it otherwise. At highway interchanges, if you're in the right lane, it'll ask you whether you want to exit or continue straight; a gentle nudge of the steering wheel to the right lets it know you want to exit the freeway. It actually does better in congested city traffic than on the open highway. In traffic, it has plenty of cars around it and can better determine the right response based on what the other drivers are doing.

The longest stretch I've had of pure autonomous driving (no hands, no feet) was a little over an hour in heavy traffic. Instead of being frustrated like other drivers, I just put on a Stan Getz live concert from the 1960s on the surround sound & video screen, turned on the seat massager, and kicked back. For a brief moment, I almost felt guilty...then quickly drifted back into a state of relaxed bliss.

Petrarch mentions cases of cars strictly obeying traffic laws and speed limits. Mine, at least, paired with Bosch Mobility Solutions' systems, behaves much more like me than like a robot. It'll gladly drive well past the speed limit, up to 120mph. It doesn't always get red light timing right, especially if the person behind me isn't slowing down. It's never blown through a red light, but there have been a few times when the light was changing as the car was going through the intersection. I've found this happens most often in areas where they've installed red light cameras and SHORTENED the yellow light duration. A peculiar trait of the auto-drive system is that it'll keep driving slower and slower if someone's tailgating the car. I'm not sure if it picked this up from me (as I do this) or if it's programmed that way. Either way, it makes for a good chuckle inside the cabin to know the jerk tailgating is fighting a computer.

To date, the car has avoided 3 accidents:

#1) I almost hit a deer in the middle of the night. The car's radar picked it up before I saw it, and the car had already started responding by the time I reached for the brake pedal.

#2) I was stopped at a red light, behind a van. The car had been stopped for a good 10 seconds or so when it suddenly roared to life and jumped into the right turn lane to get out of the way. The guy behind me wasn't stopping and ended up hitting the van that had been in front of me.

#3) I was hand-driving the car, and traffic on the interstate was coming to a quick stop. I started braking very aggressively to avoid the car in front of me. Suddenly the brake pedal got soft, my seatbelt tightened, the seat grabbed me, and I heard tires screeching behind me. The pickup truck driver ended up swerving at the last minute and grazed the concrete barrier in the breakdown lane. I got out to check on him and he was fine. He noted that he would have plowed right into me (he was distracted) except that my car started strobing its tail lights.

I should note, my car is *NOT* internet-connected -- we crippled that feature during the first week.